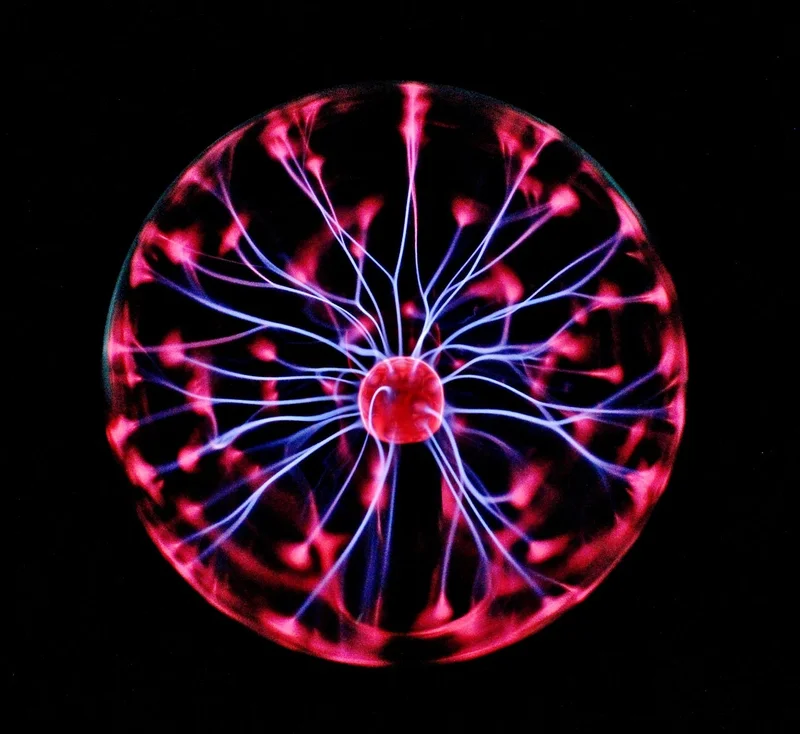

For decades, the promise of fusion energy has felt like a shimmering mirage on the horizon: the ultimate prize of clean, limitless power, always just out of reach. We’ve been trying to bottle a star, to tame here on Earth the very process that fuels our sun. The biggest hurdle? The fuel itself. This isn’t the blood plasma you might donate at a clinic; it’s the fourth state of matter, a chaotic, superheated soup of ions and electrons that writhes and bucks against our control with unimaginable force.

Controlling this stellar fire is like trying to hold lightning in a bottle. Our best tools, our most advanced sensors, have always had blind spots. They give us snapshots, glimpses into the maelstrom, but the full picture has remained infuriatingly elusive. We could see the storm, but we couldn't predict the gusts that could tear our machine apart.

Until now.

When I first read the Nature Communications paper from the team at Princeton, I honestly just sat back in my chair, speechless. They haven’t just built a better sensor. They’ve built an AI that can see what our sensors can’t. This is the kind of breakthrough that reminds me why I got into this field in the first place.

A Ghost in the Machine

Imagine trying to understand an orchestra by only listening to the first violin. You can get the melody, the tempo, but you’re missing the harmony, the thunder of the percussion, the soul of the cello. You know there’s more there, but you can’t hear it. Our fusion diagnostics—the sensors we use to monitor the plasma—have been in a similar bind.

One of the most critical techniques is called Thomson scattering: laser pulses are fired into the plasma, and the light scattered off its electrons reveals their temperature and density. It’s brilliant, but it isn’t fast enough. It only measures when a laser pulse fires, and in the fractions of a second between those pulses, violent instabilities can bubble up and threaten the entire reaction. The most notorious are edge-localized modes, or ELMs. Put simply, they’re like tiny, violent solar flares inside the reactor, and they can damage the machine's walls. For years, taming them has been one of fusion’s holy grails.
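
To make the sampling problem concrete, here is a toy sketch in Python. Everything in it is hypothetical: the time units are arbitrary, and the signal, the burst, and the sampling periods are invented for illustration rather than taken from any real diagnostic. It shows a brief spike that starts and ends between two samples of a slow, periodically sampled instrument never appearing in that instrument's data, while a densely sampled one catches it.

```python
# Hypothetical illustration: a short burst that falls between the samples of a
# slow, periodically sampled diagnostic is invisible to it.
# Units are arbitrary time steps; none of these numbers come from a real machine.

def signal(t: int) -> float:
    """Flat baseline of 1.0 with a brief ELM-like spike for 20 <= t < 30."""
    return 5.0 if 20 <= t < 30 else 1.0

def sample(period: int, duration: int) -> list[tuple[int, float]]:
    """Return (time, value) pairs taken every `period` steps, starting at t = 0."""
    return [(t, signal(t)) for t in range(0, duration, period)]

slow = sample(period=50, duration=200)  # stand-in for a pulsed-laser diagnostic
fast = sample(period=1, duration=200)   # stand-in for a continuously sampled one

print("slow max:", max(v for _, v in slow))  # the spike never shows up here
print("fast max:", max(v for _, v in fast))  # the spike is caught here
```

The slow sampler looks only at steps 0, 50, 100 and 150, so from its point of view nothing ever happened.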

This is where the Princeton team’s AI, called Diag2Diag, changes the game. They trained it on data from a whole suite of different sensors, letting it learn the deep, hidden relationships between their signals. In effect, the AI learned to hear the full symphony. Now it can take data from the other diagnostics and generate a synthetic, high-resolution version of what a slower diagnostic such as Thomson scattering would have seen, filling in the gaps between its measurements. It’s like an artist who can paint a photorealistic landscape after seeing only a few key brushstrokes.
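
The core idea (learn a mapping from always-available signals to an intermittently measured one, then apply it between measurements) can be sketched in a few lines of Python. This is a toy under loud assumptions: `fast_a`, `fast_b`, the hidden linear relationship, and every number are invented for illustration, and plain least-squares regression stands in for whatever model Diag2Diag actually uses, which this sketch does not reproduce.

```python
import numpy as np

rng = np.random.default_rng(seed=0)
t = np.arange(1000)  # arbitrary time steps (hypothetical)

# Two hypothetical "fast" diagnostics, recorded at every time step.
fast_a = np.sin(2 * np.pi * t / 200)                      # slow oscillation
burst = ((t >= 95) & (t < 115)) | ((t >= 420) & (t < 440))
fast_b = np.where(burst, 3.0, 0.0)                        # two short bursts

# Invented "true" relationship; the fitting step below never sees these numbers.
true_target = 2.0 * fast_a + 0.5 * fast_b + 1.0

# The hypothetical "slow" diagnostic reports the target only every 50 steps, with noise.
slow_idx = np.arange(0, t.size, 50)
slow_meas = true_target[slow_idx] + rng.normal(0.0, 0.02, slow_idx.size)

# Fit target ~ w_a * fast_a + w_b * fast_b + c using only the moments when both exist.
features = np.column_stack([fast_a, fast_b, np.ones_like(fast_a)])
weights, *_ = np.linalg.lstsq(features[slow_idx], slow_meas, rcond=None)

# Apply the learned map at every fast time step to fill in the gaps.
reconstructed = features @ weights

# Baseline for comparison: straight-line interpolation between slow samples.
interpolated = np.interp(t, t[slow_idx], slow_meas)

print("learned weights:", np.round(weights, 2))
print("max error, learned map:  ", np.abs(reconstructed - true_target).max())
print("max error, interpolation:", np.abs(interpolated - true_target).max())
```

Running it should print weights close to 2, 0.5 and 1, and a far smaller maximum error for the learned map than for interpolation, which cannot know about the burst at steps 420 to 439. There is a catch: the map fills that gap only because a similar burst happened to land on a slow sample (step 100) during fitting. Had no burst ever been measured, the `fast_b` column would be all zeros at the fitting points, and least squares would have no information about how the target responds to it.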

This isn't just an upgrade; it's a paradigm shift. For the first time, we have a way to see the fine-grained details of the plasma’s edge, its most critical and turbulent region, on the timescales where the instabilities actually unfold. The AI’s reconstructed data was so detailed, in fact, that it provided powerful new evidence for a leading theory of how to suppress those dangerous ELMs: that tiny “magnetic islands” forming at the plasma's edge are what calm it down. This is huge. It means we’re not just guessing anymore: we’re seeing the physics in action and understanding the mechanism, and that understanding is the first giant leap toward true control.

We've Given Our Instruments a New Sense

Let's step back for a second and think about what this really means. This breakthrough is so much bigger than just fusion. We have created a tool that can perceive a reality that is physically invisible to our best instruments.

Think about the invention of the microscope. Suddenly, a drop of pond water wasn't just water; it was a universe teeming with life. Or the telescope, which turned the night sky from a canvas of faint lights into a breathtaking cosmos of galaxies and nebulae. These tools didn't change reality; they expanded our ability to perceive it. Diag2Diag is the 21st-century version of that leap. It’s a computational microscope for complex systems.

The researchers themselves note it could be used to enhance data from failing sensors on spacecraft or to add finer-grained feedback to robotic surgery. But where else could we point this new "eye"? Could we use it to predict financial market crashes by seeing the invisible turbulence that precedes them? Or to forecast the complex weather patterns that spawn catastrophic storms by filling in the gaps between satellite and ground-sensor data?

Of course, with this power comes a profound responsibility. We are beginning to rely on an AI’s interpretation of reality, a reality we ourselves cannot directly observe. We must proceed with both excitement and caution, ensuring we understand the models we are building. How do we verify an insight that no human or physical tool can confirm? That’s a question we’ll have to answer as we venture further into this new territory.

But the path forward is electrifying. We are on the cusp of moving fusion energy from the realm of perpetual experiment to a source of reliable, 24/7 power. By using AI to make our reactors smarter, more compact, and more economical, we’re clearing some of the final, most stubborn hurdles. We are teaching our machines not just to calculate, but to infer, to intuit, to see. And in doing so, we’re giving ourselves a new way to see the future.

We Just Taught a Machine to See the Impossible

This is more than just a clever algorithm. It’s a fundamental change in the relationship between data and reality. We’ve spent centuries building better and better instruments to measure the world. Now we're building intelligence that can look at the imperfect data from those instruments and infer the detail hidden within it. We’re not just solving one of fusion’s biggest problems; we’re opening a door to a new era of discovery, one where the limits of our perception are set less by our instruments and more by our ingenuity. The future isn't just something we're building; it's something we're finally beginning to see clearly.